We started the lecture by asking how to spell "Arithmetic". Unlike "Mathematics", arithmetics is spelled with an "e" after m. Arithmetics deals with the four basic operations.

In Huffman Coding, each char has an explicit code. In Arithmetic Coding (AC) characters do not have codes. A whole file is compressed into a single decimal number. In reality, the file (ie. sequence of chars) is compressed to a range of numbers. The encoder sends a number to the decoder which is inside the range and has the minimum number of bits. One version of AC is such that the decoder keeps narrowing the range such that the number is always in the range and stops when it decodes enough chars.

The width of the range that corresponds to all possible sequences is 1. As we code more and more chars, the range gets narrower. The width of the range is equal to the probability of the sequence that is decoded so far.

Here is one of the examples we did in class.

AC gets really near the Shannon Limit. AC in a way uses a fractional number of bits per char. Because of its superiority, we can get decent results even if we do not use LZ-type preprocessing. Nevertheless, that type of preprocessing to find repeatng patterns can also benefit AC.

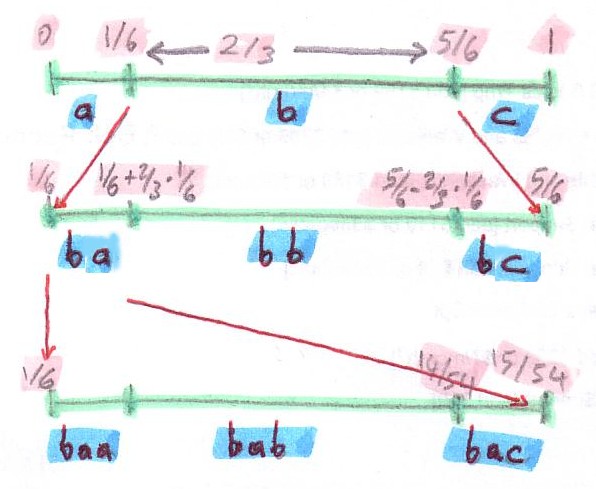

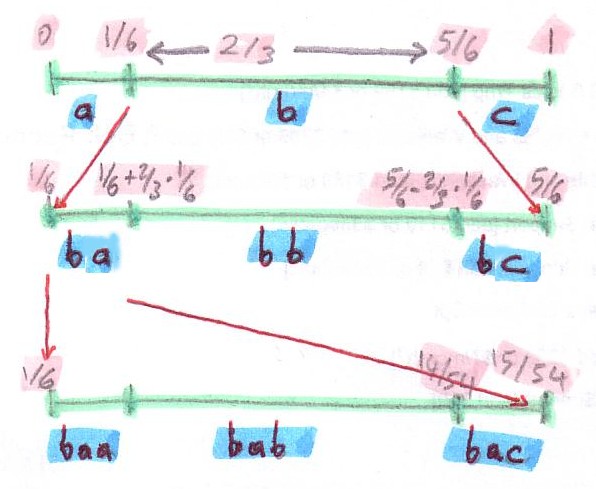

Exercise: Let's say we only have a, b, c in our file

with respective probs of 1/6,2/3,1/6. Let's say we have bac in the

file. What do we send to the AC decoder?

Answer: [0,1) corresponds to all the possible

sequences. Zero included. One is excluded. Square bracket means

included. Paranthesis means excluded. That is [.000...,.111...] in

binary. Notice the decimal point on the left.

See the below picture that pictorially explains the above steps.

Exercise: Calculate the Shannon limit for the above

example.

Answer: The Shannon limit is 1.252. That means

Shannon says you cannot code this file with less than 1.252 bits per

char on the average.

With AC, we coded 3 chars with 5 bits. That is 1.667 bpc. If we did Huffman coding b would get assigned 0, and a and c would respectively get 10 and 11. And we would still get 5 bits for those 3 chars. On the average Huffman coding would get a bpc of 1.667. Do this as an exercise. Note that Shannon Limit does not take alphabet change (hence repeating patterns into account). AC approaches Shannon's limit for very long files. Sometimes for very short sequences AC may look like it beats the Shannon limit. However, that is misleading because when you take the header info into account, it never achieves a bpc lower than Shannon's limit.

It is even possible we may seem to achieve a zero bpc in AC. Let's say the sequence we code in the above example is 1000 b's. This will correspond to a very small range around 0.5 and will look like [.01111...,.10000...). The lower and upper bounds have zero bits in common and hence we send no bits. The decoder assumes we sent .01111.... which is exactly 0.5. However, it is not true that we send no bits, we always send header bits. One of the other reasons AC or any other coding technique may seem to surpass Shannon's limit is because in that specific case you may be sending a sequence which violates the probability distribution. If we are sending 1000 b's, it seems as if the prob. of b is 1 -- not 2/3. However, we assumed a non-zero prob. for a and c.

The kind of AC we talked about here is an AC which preprocesses the whole stream to be sent. It figures the prob.s and the length and only after that it codes the stream. In practice, AC is often used in true streaming applications where you do not know the length ahead of time -- nor the probs. The encoder has to code and send the stream of chars as they arrive. The decoder is also supposed to decode chars as they come and it does not know the length either. In these cases a special EOF char is used. The prob.s are initialized to equal prob.s by both the encoder and decoder. They both use the same prob. update algorithm. The prob. estimation part of Adaptive AC and Adaptive Huffman Coding are based on the same principles.

Reading materials:

Other AC sources: ref1,

ref2, ref3,

ref4.